GCP Flow Logs Configuration

Overview

GCP VPC Flow Logs allow network traffic to be monitored and used for analysis. VPC Flow Logs cover traffic from the perspective of a VM. All egress (outgoing) traffic from a VM is logged, even if it is blocked by an egress deny firewall rule. Ingress (incoming) traffic is logged if it is permitted by an ingress allow firewall rule. Ingress traffic blocked by an ingress deny firewall rule is not logged.

Vendor reference information is available in this GCP topic: Use VPC Flow Logs.

Not every packet is captured into its own log record. However, even with sampling, log record captures can be quite large. You can balance your traffic visibility and storage cost needs by adjusting the following aspects of logs collection:

- Aggregation time interval: Sampled packets for a time interval are aggregated into a single log entry. This time interval can be 5 sec (default), 30 sec, 1 min, 5 min, 10 min, or 15 min.

- Log entry sampling: Before log entries are written to the database, they can be sampled to reduce their number. By default, the log entry volume is scaled by 0.50 (50%), which means that half of the entries are kept. You can set this value anywhere from 1.0 (100%, all log entries are kept) to 0.0 (0%, no log entries are kept).

- Metadata annotations: By default, flow log entries are annotated with metadata information, such as the names of the source and destination VMs, or the geographic region of external sources and destinations. This metadata annotation can be turned off to save storage space, but doing so is not recommended because it reduces the usability of the information that is pulled into the portal.
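The three settings above correspond to flags on the `gcloud compute networks subnets update` command. The following is a minimal sketch, not a required step: the subnet name and region are hypothetical placeholders, and the `run` helper is an illustrative convenience that prints the command by default so that it can be reviewed before anything is changed.

```shell
#!/bin/sh
# Sketch only: the subnet name and region below are hypothetical placeholders.
# DRY_RUN defaults to 1, so the command is printed rather than executed.
# (Printed output drops shell quoting; it is for review, not copy and paste.)
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi
}

# $1 = subnet, $2 = region, $3 = aggregation interval, $4 = sampling rate (0.0 to 1.0)
tune_flow_logs() {
  run gcloud compute networks subnets update "$1" \
    --region="$2" \
    --enable-flow-logs \
    --logging-aggregation-interval="$3" \
    --logging-flow-sampling="$4" \
    --logging-metadata=include-all
}

# Defaults described above: 5-second aggregation, 50% sampling, metadata kept.
tune_flow_logs example-subnet us-central1 interval-5-sec 0.5
```

Set `DRY_RUN=0` to execute the command instead of printing it. The other interval values follow the same naming pattern, for example `interval-30-sec` or `interval-15-min`.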

Enabling the Cloud SDK Command Line Interface

Enable the Cloud SDK Command Line Interface (which provides the gcloud and gsutil tools). It is required for this setup. For more information, see the GCP Cloud SDK documentation.

Creating a GCP Logging Bucket

Best practices dictate that a centralized logging project be used for long-term storage of VPC Flow Logs. The bucket created in this procedure will receive the VPC Flow Logs and will be the source for the ingestion process. Multiple projects and VPC Flow Logs can go into a single bucket, or separate buckets can be used for separate projects. Separate buckets make it easier to distinguish between GCP projects; however, a single bucket is easier to set up and configure. Both methods are supported.

Default options for bucket creation should be sufficient unless there is a specific need to use different options.

To create a GCP Logging Bucket:

- Create a new bucket by following the steps in this GCP procedure: Create a bucket.

- Record the bucket name. You will use this value in a subsequent procedure.

Create the bucket in a project of your choosing. The bucket does not need to exist in the same project as the source systems being monitored.
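As an alternative to the console procedure, a bucket with default options can be created from the command line with `gsutil mb`. This is a minimal sketch: the project ID and bucket name are hypothetical placeholders, and the command is only printed unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Sketch only: the project ID and bucket name are hypothetical placeholders.
# DRY_RUN defaults to 1, so the command is printed rather than executed.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi
}

# $1 = ID of the central logging project, $2 = bucket name
create_log_bucket() {
  run gsutil mb -p "$1" "gs://$2"
}

create_log_bucket example-logging-project example-flow-logs
```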

Assigning Read Permissions to the GCP Logging Bucket

The logging bucket now needs to have access enabled to allow the portal to pull the VPC Flow Log files via a standard account.

- In the GCP console, in the Navigation menu, select Cloud Storage, and then select Buckets.

The Buckets page appears.

- Select the bucket where the logs will reside, and then click Edit Bucket Permissions.

- Ensure that View By: MEMBERS is selected, and then click Add.

- Paste the text for the following service account in the Add members box.

activeeye-pull-files@activeeye-prod.iam.gserviceaccount.com

- Select the Storage Object Viewer permissions.

- Click Save.

The permissions are assigned.
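The same grant can also be sketched from the command line with `gsutil iam ch`, where `objectViewer` is the short form of the Storage Object Viewer role. The bucket name is a hypothetical placeholder; the service account is the one listed in the steps above. The command is only printed unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Sketch only: the bucket name is a hypothetical placeholder.
# DRY_RUN defaults to 1, so the command is printed rather than executed.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi
}

# Service account from the procedure above.
VIEWER="activeeye-pull-files@activeeye-prod.iam.gserviceaccount.com"

# $1 = bucket name
grant_read_access() {
  run gsutil iam ch "serviceAccount:${VIEWER}:objectViewer" "gs://$1"
}

grant_read_access example-flow-logs
```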

Providing Account Information to Your Service Representative

Before log collection can begin, your ActiveEye Engineering service representative must grant permissions to enable the generation of notifications related to the buckets. Provide the bucket name(s) and the project number of the project containing the bucket(s) to your service representative. To find the project number, follow this GCP procedure: Identifying projects.
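If the Cloud SDK is already set up, the project number can also be looked up with `gcloud projects describe`. This is a minimal sketch with a hypothetical project ID; the command is only printed unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Sketch only: the project ID is a hypothetical placeholder.
# DRY_RUN defaults to 1, so the command is printed rather than executed.
# (Printed output drops shell quoting; quote the --format value if you
# type the command by hand.)
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi
}

# $1 = project ID; prints only the numeric project number when executed
project_number() {
  run gcloud projects describe "$1" "--format=value(projectNumber)"
}

project_number example-logging-project
```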

Creating the GCP Flowlogs Service Connector

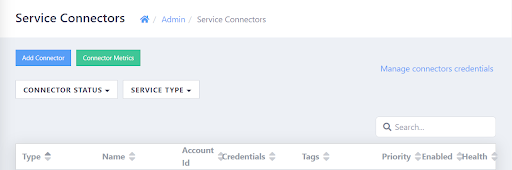

- In ActiveEye, in the left pane, click Admin, and then click Service Connectors.

The Service Connectors page appears.

- In the upper-left corner of the page, click Add Connector.

A list of service connectors appears.

- Scroll down to the CLOUD INFRASTRUCTURE section, and then, in the GCP Flowlogs subsection, click the Add Connection button.

The Add Connector Account page appears.

- In the Display Name box, enter a unique name.

- In the Resources box, enter information to connect the ActiveEye service connector to either the GCP project that contains the service account created in the previous procedure, or to the organization containing that project. To do so, enter the resource type and ID separated by a forward slash, as seen in these examples:

- projects/[PROJECT_ID] (e.g., projects/ExampleID)

- organizations/[ORGANIZATION_ID] (e.g., organizations/ExampleID)

- Optionally, modify the priority level in the Priority box. Raising or lowering the priority will increase or decrease the visibility of alerts related to this service connector.

- If you do not want data ingestion to begin immediately once cloud accounts have been configured, clear the Enable Account checkbox. Otherwise, leave the checkbox selected.

- Click Add.

The GCP Flowlogs service connector is created, and the page is refreshed.

There is a limit of one resource definition per GCP service connector. To monitor multiple resources, you will need to create multiple GCP service connectors.

Enabling VPC Flow Logs

Now that the infrastructure is ready for processing, complete the following steps for each VPC in each project to enable logging:

- To enable logging, follow the steps in this GCP procedure: Enable VPC Flow Logs for an existing subnet.

- Refresh the VPC networks page and confirm that On is displayed for all subnets in the Flow logs column.

- Repeat this process for each VPC in each project.
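When many subnets are involved, the repetition can be scripted. The following is a minimal sketch, assuming a hand-maintained list of hypothetical `subnet:region` pairs for one project; it prints one `gcloud` command per subnet and executes them only when `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Sketch only: the project ID and the subnet:region pairs are hypothetical.
# DRY_RUN defaults to 1, so commands are printed rather than executed.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi
}

# $1 = project ID, $2 = space-separated list of subnet:region pairs
enable_all() {
  for entry in $2; do
    # Text before the colon is the subnet name; text after it is the region.
    run gcloud compute networks subnets update "${entry%%:*}" \
      --project="$1" --region="${entry##*:}" --enable-flow-logs
  done
}

enable_all example-project "subnet-a:us-central1 subnet-b:europe-west1"
```

Call `enable_all` once per project, and then confirm the result on the VPC networks page as described above.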

Configuring Flow Logs Sink to Cloud Storage Bucket

Now that the logs are enabled, they need to be directed to the preconfigured bucket for collection.

- Create the sink by following the steps in this GCP procedure: Create a sink.

As you complete the linked procedure, enter information as follows:

- In the Select sink service box, select Cloud Storage bucket.

- In the Cloud Storage bucket box, enter the name of the bucket you created.

- In Choose logs to include in sink, to include all VPC Flow Logs in the project, enter the following:

log_id("compute.googleapis.com/vpc_flows")

Flow log files will populate the bucket approximately once an hour.
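The sink settings above can also be expressed with `gcloud logging sinks create`. This is a minimal sketch: the sink name and bucket name are hypothetical placeholders, and the filter is the one given above. The command is only printed unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Sketch only: the sink name and bucket name are hypothetical placeholders.
# DRY_RUN defaults to 1, so the command is printed rather than executed.
# (Printed output drops shell quoting; the filter must be quoted if you
# type the command by hand.)
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi
}

# Inclusion filter from the procedure above: all VPC Flow Logs in the project.
FILTER='log_id("compute.googleapis.com/vpc_flows")'

# $1 = sink name, $2 = destination bucket name
create_flow_log_sink() {
  run gcloud logging sinks create "$1" "storage.googleapis.com/$2" \
    --log-filter="$FILTER"
}

create_flow_log_sink example-flow-log-sink example-flow-logs
```

When a sink is created this way, `gcloud` reports a writer identity for the sink. That identity needs permission to write objects to the bucket, as covered in the linked GCP procedure.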

Configuring GCP Bucket Notification

Configuring a bucket notification enables notifications of new log files for processing. For background on this setup, see the following GCP documentation: Configure object change notification.

This configuration will fail unless permissions have been granted to enable the generation of notifications as described in the Providing Account Information to Your Service Representative section of this topic. Additionally, log collection will not begin unless the service connector has been created for the project.

This action cannot be completed via the GCP Console; it must be done via command line tools. See the Enabling the Cloud SDK Command Line Interface section of this topic.

- To configure notifications, replace [bucket name] in the text below with the name of your bucket, and then run the command in the scope of the project that owns the bucket:

gsutil notification create -t projects/activeeye-prod/topics/activeeye-gcp-flow-log-ingest -s -f json -e OBJECT_FINALIZE gs://[bucket name]

Repeat this for each source bucket.
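With several source buckets, the command above can be wrapped in a loop. The following is a minimal sketch, assuming a hypothetical list of bucket names; the topic and flags are copied from the command above. Commands are only printed unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Sketch only: the bucket names are hypothetical placeholders.
# DRY_RUN defaults to 1, so commands are printed rather than executed.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi
}

# Pub/Sub topic from the command above.
TOPIC="projects/activeeye-prod/topics/activeeye-gcp-flow-log-ingest"

# $1 = space-separated list of bucket names
notify_all() {
  for bucket in $1; do
    run gsutil notification create -t "$TOPIC" -s -f json \
      -e OBJECT_FINALIZE "gs://$bucket"
  done
}

notify_all "example-flow-logs-a example-flow-logs-b"
```

Run the loop in the scope of the project that owns the buckets, as with the single command.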