MySQL

Version Support Notes

MySQL can be run on several different platforms or within various types of Cloud Infrastructure. Not all of the possible source platforms are currently supported. At present these source platforms are supported and included in the documentation below:

- Google Cloud Platform - MySQL Instance

Architecture Overview

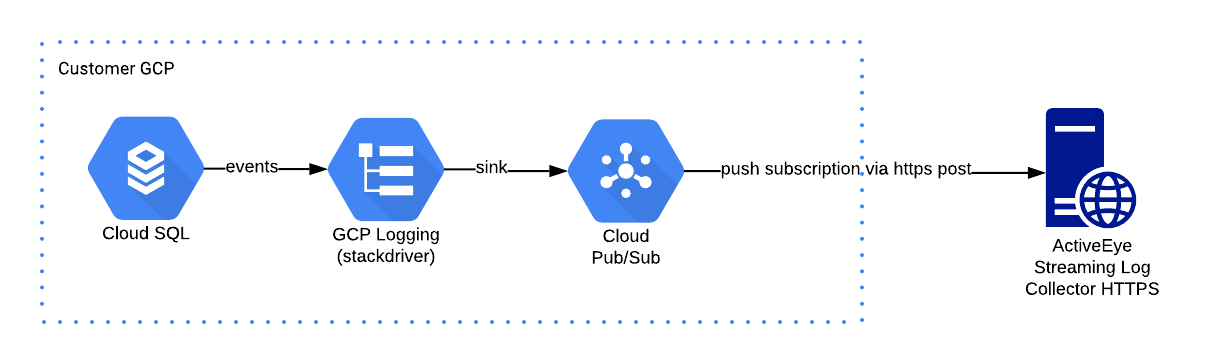

MySQL logging events initiated from the GCP Cloud SQL service will be sent to ActiveEye via the Push Subscription method to a log ingestion endpoint. An api key will be part of the URL path that will serve as both a method of authentication as well as to identify which Service Connector in ActiveEye to associate the log data with.

The following diagram provides a high level overview of this method of log delivery.

Setup MySQL Service Connector

In the portal, create a new MySQL Service Connector for a single MySQL database that needs to be monitored. An endpoint URL with an API token will be generated for this connector. This URL will be used later in the setup.

Save the generated URL for use in the sections below.

It is recommended to setup one Service Connector per database as Google Cloud uses quotas to limit volumes.

Google Cloud Platform Configuration

Configure the Pub/Sub to Forward Logs

To enable log forwarding perform the following steps:

- Go to the Pub/Sub console and create a new topic.

- Give the topic an explicit name and save

- Go to the Pub/Sub topics overview page.

- Select "Subscriptions" from the navigation (triple bars) on the left.

- Create a subscription ID and select the topic created in Step 2.

- Select the "Push" method and enter the URL for the Service Connector from the previous section.

- Configure any additional options, such as Subscription expiration, Acknowledgement deadline, Message retention duration, or Dead lettering.

- Hit Create at the bottom.

A Pub/Sub topic and subscription should be done for each Service Connector created using an understandable topic name possibly referencing the database or Service Connector name.

Export the database logs from GCP Logging to the Pub/Sub

To push the database logs to a created pub/sub perform the following steps:

- Go to the GCP Logging page and filter the logs to the database. These are sample query formats that should use the specific [PROJECT_ID] and [DATABASE_ID] information.

-

Cloud SQL MySQL error logs: resource.type="cloudsql_database" AND log_name="projects/[PROJECT_ID]/logs/cloudsql.googleapis.com%2Fmysql.err"

-

Cloud SQL MySQL-based databases: resource.type="cloudsql_database" AND resource.labels.database_id="[DATABASE_ID]" AND log_name="projects/[PROJECT_ID]/logs/cloudsql.googleapis.com%2Fmysql"

The actual log_name appears to be "projects/[PROJECT_ID]/logs/cloudsql.googleapis.com%2Fmysql-general.log" even though the above query is what Google provided. Verify how they are setup in the environment and adjust accordingly.

- Click "Create Sink" and name the sink accordingly.

- Choose "Cloud Pub/Sub" as the destination and select the Pub/Sub created in the previous section.

The Pub/Sub can be located in a different project.

- Click "Create" and wait for the confirmation message to show up.

It is possible to create several exports from GCP Logging to the same Pub/Sub with different sinks. This can be used for multiple sources of data like the error logs and database logs noted above.

Monitor the Log Forwarding

Pub/subs are subject to Google Cloud quotas and limitations. If the number of logs you have is higher than those limitations, it is recommended you split your logs over several topics, using different filters.

To be automatically notified when you reach this quota, activate the Pub/Sub metric integration and set up a monitor on the metric gcp.pubsub.subscription.num_outstanding_messages. Filter this monitor on the subscription setup to make sure it never goes above 1000.